The challenges of Data Science

The hard truths:

First, the majority of the work that goes into conducting successful analyses lies in preprocessing data. Data is messy, and cleansing, munging, fusing, mushing and many other verbs are prerequisites for doing anything useful with it. A typical data processing pipeline requires spending far more time on feature engineering and selection than on choosing and writing algorithms. The Data Scientist must choose from a wide variety of potential features (data), each of which comes with its own challenges in converting to vectors fit for machine learning algorithms. A system therefore needs to support more flexible transformations than turning a 2-D array of doubles into a mathematical model.
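
To make the "converting to vectors" step concrete, here is a minimal sketch in plain Scala (no Spark required). The `Listing` record, its fields and the `FeatureEncoding` helper are hypothetical names invented for illustration; the sketch assumes one categorical field that is one-hot encoded and two numeric fields that are passed through unchanged.

```scala
// Hypothetical record type: the field names are illustrative only.
final case class Listing(city: String, rooms: Int, priceThousands: Double)

object FeatureEncoding {
  // Assign a stable index to each distinct category seen in the data.
  def categoryIndex(values: Seq[String]): Map[String, Int] =
    values.distinct.sorted.zipWithIndex.toMap

  // One-hot encode the city, then append the numeric fields as doubles.
  def toVector(listing: Listing, cities: Map[String, Int]): Array[Double] = {
    val oneHot = Array.fill(cities.size)(0.0)
    // A city that was never indexed leaves the one-hot part all zeros.
    cities.get(listing.city).foreach(i => oneHot(i) = 1.0)
    oneHot ++ Array(listing.rooms.toDouble, listing.priceThousands)
  }
}
```

Even this toy case involves choices (how to index categories, what to do with unseen values) that have nothing to do with the learning algorithm itself, which is the point the paragraph above makes.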

Second, iteration. Models typically require multiple passes over the same data to reach convergence. Iteration is also crucial within the Data Scientist’s own workflow: usually the results of one query inform the next query to run. Feature and algorithm selection, significance testing and finding the right hyper-parameters all require multiple experiments.
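
As an illustration of "multiple passes over the same data", the sketch below fits a single slope by gradient descent in plain Scala. It is a toy under stated assumptions: the model is y ≈ w · x, and `IterativeFit`, `fitSlope` and all parameter names are hypothetical. Each loop iteration scans the whole dataset once.

```scala
object IterativeFit {
  // Fit y ≈ w * x by gradient descent; every iteration is one full pass over `data`.
  // Returns the fitted slope and the number of passes that were needed.
  def fitSlope(data: Seq[(Double, Double)],
               learningRate: Double,
               tolerance: Double,
               maxPasses: Int): (Double, Int) = {
    var w = 0.0
    var passes = 0
    var converged = false
    while (!converged && passes < maxPasses) {
      // Gradient of the mean squared error with respect to w.
      val gradient = data.map { case (x, y) => 2.0 * (w * x - y) * x }.sum / data.size
      val next = w - learningRate * gradient
      converged = math.abs(next - w) < tolerance
      w = next
      passes += 1
    }
    (w, passes)
  }
}
```

If every pass had to reload the data from disk, the cost of the loop would be dominated by I/O rather than arithmetic, which is why iterative workloads benefit from keeping the dataset in memory.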

Third, implementation. Models need to be operationalised as part of a production service, and may need to be rebuilt periodically or even in real time: analytics in the ‘lab’ versus analytics in the ‘factory’. Exploratory analytics usually happens in a language such as R, whereas the data pipelines of production applications are typically written in C++ or Java.

Enter Apache Spark

Spark is an open-source framework that combines an engine for distributing programs across clusters of machines with an elegant model for writing programs atop it.

Word Count

In this Scala example, we build a dataset of (String, Int) pairs called counts and then save it to a file:

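    // Assumes `file` is an RDD[String] of text lines loaded earlier (not shown here)
    val counts = file.flatMap(line => line.split(" ")) // split each line into words
      .map(word => (word, 1))                          // pair each word with a count of 1
      .reduceByKey(_ + _)                              // sum the counts for each word
    counts.saveAsTextFile("hdfs://…")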
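
To see what each step produces without a cluster, the same logic can be sketched on an in-memory `Seq` using plain Scala collections. This is an analogy rather than Spark code: `LocalWordCount` is a hypothetical name, and because standard collections have no `reduceByKey`, a `groupBy` followed by a sum stands in for it.

```scala
object LocalWordCount {
  def count(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(line => line.split(" "))    // one element per word
      .map(word => (word, 1))              // pair each word with a count of 1
      .groupBy { case (word, _) => word }  // collect the pairs that share a key
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) } // stand-in for reduceByKey(_ + _)
}
```

For example, `LocalWordCount.count(Seq("to be or", "not to be"))` yields a map in which "to" and "be" have a count of 2 and "or" and "not" have a count of 1.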

One way to understand Spark is to compare it to its predecessor, MapReduce. Spark maintains MapReduce’s linear scalability and fault tolerance, but extends it in three important ways:

First, rather than relying on a rigid map-then-reduce format, Spark’s engine can execute a more general directed acyclic graph (DAG) of operators.

This means that, rather than writing out intermediate results to the distributed filesystem as MapReduce does, Spark can pass them directly to the next step in the pipeline.

http://www.dagitty.net/learn/graphs/index.html

Secondly, Spark has a rich set of transformations that enable users to express computation more naturally. Its streamlined API can represent complex pipelines in a few lines of code.

Thirdly, Spark leverages in-memory processing rather than distributed disk. Developers can materialise any point in a processing pipeline into memory across the cluster, so that later steps working on the same dataset need not recompute it or reload it from disk.
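
A minimal sketch of this materialisation, assuming Spark is on the classpath and run in local mode: the `CacheSketch` object, the `summarize` helper and the sample sentences are hypothetical, while `parallelize`, `cache`, `sum` and `max` are standard RDD operations. `cache()` only marks the dataset for in-memory storage; it is actually filled when the first action runs.

```scala
import org.apache.spark.SparkContext
import org.apache.spark.sql.SparkSession

object CacheSketch {
  // Returns the total and the maximum word length across all lines.
  def summarize(sc: SparkContext, lines: Seq[String]): (Double, Int) = {
    val lengths = sc.parallelize(lines)
      .flatMap(_.split(" "))
      .map(_.length)
      .cache()                    // mark for in-memory storage; filled on the first action

    val total = lengths.sum()     // first action: computes the pipeline and caches the result
    val longest = lengths.max()   // second action: can read the cached partitions instead of recomputing
    (total, longest)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("cache-sketch").master("local[*]").getOrCreate()
    // Hypothetical input standing in for an expensive-to-compute dataset.
    val result = summarize(spark.sparkContext,
      Seq("spark keeps data in memory", "mapreduce writes to disk"))
    println(result)
    spark.stop()
  }
}
```

Without the `cache()` call both actions would still return the same values, but the second would rerun the `flatMap` and `map` steps from the source data.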

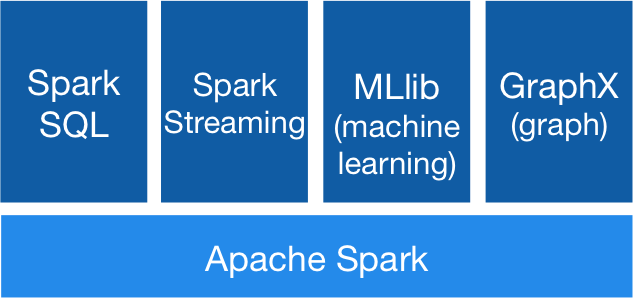

Perhaps most importantly, Spark’s collapsing of the full pipeline, from preprocessing to model evaluation, into a single programming environment can dramatically improve productivity. By packaging an expressive programming model with a set of analytic libraries under a REPL, it avoids round trips and moving data back and forth. Spark spans the gap between lab and factory analytics: it is better at being an operational system than most exploratory systems, and better for data exploration than the technologies commonly used in operational systems. Spark is also highly versatile: it offers SQL functionality, can ingest data from streaming services such as Flume and Kafka, and includes machine learning tools and graph processing capabilities.